Decentralized Zero-Knowledge Machine Learning: Implications and Opportunities

The continued evolution of blockchain technology has opened up a world of possibilities for decentralized systems, changing the landscape of various industries, including finance and content creation. One of the most exciting developments in this realm, in our opinion, is the emergence of decentralized Zero-Knowledge Machine Learning (ZKML). ZKML’s goal is to transform these industries by providing data privacy, enhanced security, and a democratized approach to data usage and control. Let’s dive in.

Understanding Decentralized Zero-Knowledge Machine Learning

Before delving into its implications, it’s important to understand what ZKML is. Fundamentally, ZKML enables algorithms to learn from data they cannot see. It relies on zero-knowledge proofs: cryptographic methods by which one party can prove to another that it knows a value without conveying any information beyond the fact that it knows the value.

In the context of machine learning, this allows the model to learn from the data without the data ever being exposed. This can be particularly useful in a decentralized setting, where data privacy and security are paramount.

Decentralized ZKML is a cutting-edge technology that combines the fields of machine learning (ML), cryptography, and decentralized systems. It builds upon the foundations of zero-knowledge proofs (ZKP), a cryptographic technique for proving the possession of certain information without revealing the information itself, and applies this concept to machine learning processes in a decentralized setting.

ZKML operates by training ML models on data spread across different nodes in a decentralized network. The nodes can then generate zero-knowledge proofs about their data. These proofs allow the nodes to confirm certain properties or features of the data without disclosing the data itself.

For instance, in a ZKML-powered healthcare system, different hospitals (nodes) could collectively train a machine learning model on their patient data without sharing the patient records themselves. Each hospital could generate a zero-knowledge proof that confirms the data’s validity, enabling the model to learn from the whole dataset without compromising patient privacy.
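To make the hospital example more concrete, here is a minimal sketch of the collaborative training loop on its own. It uses plain federated averaging: each site updates a shared model on its local records and sends back only the updated weight. The zero-knowledge proof that each site would attach to its update is not shown. The dataset values, the one-parameter linear model, the learning rate, and all names such as `HOSPITAL_DATA` are our own assumptions for illustration.

```python
# Each "hospital" holds private (x, y) pairs; only model weights leave the site.
# All values below are made up for illustration.
HOSPITAL_DATA = {
    "hospital_a": [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)],
    "hospital_b": [(4.0, 12.0), (5.0, 15.0)],
    "hospital_c": [(0.5, 1.5), (1.5, 4.5)],
}


def local_update(weight, records, lr=0.01, steps=5):
    """Run a few gradient-descent steps on y ~ weight * x using local records only."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * x * (weight * x - y) for x, y in records) / len(records)
        weight -= lr * grad
    return weight


def federated_round(global_weight, datasets):
    """Average the locally updated weights, weighted by each site's record count."""
    total = sum(len(records) for records in datasets.values())
    return sum(
        local_update(global_weight, records) * len(records) / total
        for records in datasets.values()
    )


weight = 0.0
for _ in range(50):
    weight = federated_round(weight, HOSPITAL_DATA)
print(round(weight, 4))  # learned slope of the shared model
```

The coordinator never sees a patient record, only one number per site per round. In a ZKML setting, the open question is how each site can cheaply prove that the number it returned was honestly computed from valid data.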

The beauty of ZKML lies in its ability to harness the collective intelligence of decentralized networks while respecting individual data privacy. It brings together the power of machine learning, the privacy of zero-knowledge proofs, and the resilience and robustness of decentralized systems.

ZKML also has potential use cases beyond privacy-preserving model training. For example, zero-knowledge proofs can be used to verify the outputs or computations of machine learning algorithms. They can also be combined with secure multi-party computation, where different parties want to jointly compute a function on their inputs while keeping those inputs private. Together, these techniques enable private yet auditable computations, where one can verify the correctness of a computation without seeing the underlying data.

However, ZKML is not without its challenges. Generating zero-knowledge proofs, particularly for complex machine learning models, can be computationally intensive and time-consuming. Achieving the necessary balance between privacy, accuracy, and computational efficiency is a significant technical challenge in ZKML.

Moreover, the successful deployment of ZKML requires not just technical solutions but also careful attention to issues of trust, transparency, and governance. Users need to understand and trust the system, and clear rules need to be established for how data is used, accessed, and controlled.

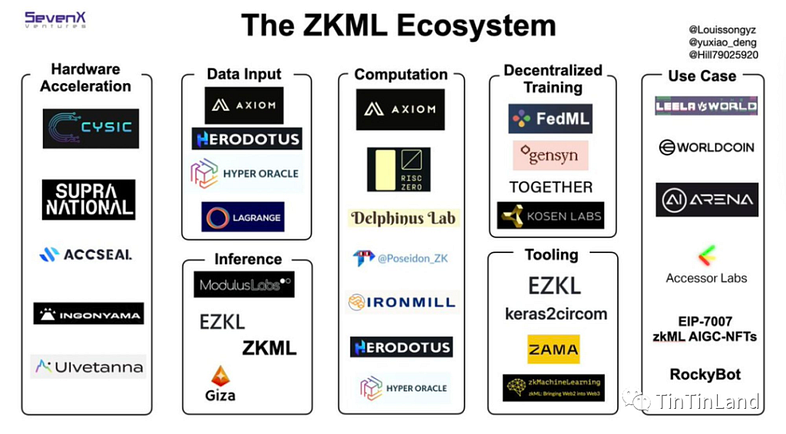

ZKML is a promising technology that combines the strengths of machine learning, cryptography, and decentralized systems. While it poses considerable challenges, its potential to transform data privacy and security is immense and worthy of further exploration. Currently in Web3, several projects are building at the intersection of blockchain, zero-knowledge proofs, and machine learning, including Modulus Labs, ZKonduit, and Gensyn, alongside several academic research institutions contributing to the evolving thinking in this space.

Let’s take a closer look at a few areas.

A. Advanced Cryptography in ZKML

The essence of ZKML lies in its cryptographic principles. Zero-Knowledge Proofs (ZKP) are advanced cryptographic protocols allowing one party to prove to another that a given statement is true without conveying any additional information. ZKPs are integral to ZKML, serving as the instrument that allows computations on private data.
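As a small illustration of what "proving a statement without revealing anything else" looks like in practice, the sketch below implements a toy Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. The prover shows that it knows the secret exponent behind a public value without disclosing it. This is our own example rather than anything specific to a ZKML project, and the group parameters `P`, `Q`, and `G` are deliberately tiny assumptions that would be insecure in any real deployment.

```python
import hashlib
import secrets

# Toy group parameters (assumption, far too small for real use):
# P is a safe prime, Q = (P - 1) // 2 is prime, and G generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir challenge: hash the public transcript into an integer mod Q."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple[int, int]:
    """Prove knowledge of `secret` such that public_key == G**secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)            # fresh randomness masks the secret
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % Q
    return commitment, response


def verify(public_key: int, proof: tuple[int, int]) -> bool:
    """Check G**response == commitment * public_key**c (mod P)."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


secret = secrets.randbelow(Q - 1) + 1       # prover's private value in [1, Q - 1]
public_key = pow(G, secret, P)              # the only value that gets published
proof = prove(secret)
print(verify(public_key, proof))            # the verifier never sees `secret`
```

The verifier only ever handles `public_key` and `proof`. Proving statements about an entire machine learning computation follows the same idea, but needs far heavier machinery than this single equation.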

More advanced forms of ZKPs, such as zk-SNARKs (Zero-Knowledge Succinct Non-Interactive Arguments of Knowledge) and zk-STARKs (Zero-Knowledge Scalable Transparent Arguments of Knowledge), produce compact proofs about complex computations that can be verified efficiently and securely. This could prove significant in the realm of machine learning, where computations can be highly intensive.

B. Decentralization and Network Design in ZKML

Decentralization is another key aspect of ZKML. This refers to the dispersion and distribution of functions and powers away from a central authority. In a ZKML environment, data is spread across different nodes in a network, creating a decentralized system. Decentralization reduces the risk of data loss and increases resilience to attacks or system failures.

The architecture of these decentralized networks is crucial for ensuring robustness and efficient performance. Blockchain and Distributed Ledger Technology (DLT) often form the backbone of such decentralized systems due to their inherent security, transparency, and immutability.

C. Machine Learning and ZKML

ZKML leverages ML models that learn and make decisions or predictions based on data. What sets these models apart is that their inputs and computations are backed by zero-knowledge proofs rather than disclosed directly. This enables ZKML to perform data analyses, learning, and prediction tasks without ever exposing the underlying data.

The types of machine learning models that can be applied in ZKML are diverse, including but not limited to supervised learning models, unsupervised learning models, and reinforcement learning models. However, adapting these models to work in a zero-knowledge context remains a significant research challenge.

D. Trust and Governance in ZKML

While the technical aspects of ZKML are important, the issue of trust and governance is equally critical. Users need to trust the ZKML system, understand how it works, and believe in its ability to protect their data privacy. Clear policies and regulations must be established regarding data access, use, and control.

ZKML is a multidisciplinary technology that combines machine learning, advanced cryptography, and decentralization. Despite the challenges it presents, we believe its potential to revolutionize data privacy, security, and machine learning processes makes it an exciting field of exploration. Let’s go deeper into these topics and provide examples of viable use cases.

Implications for Decentralized Finance (DeFi)

Decentralized Finance, or DeFi, is a rapidly expanding field that is reshaping the global financial system. By leveraging blockchain technology, DeFi applications provide services like lending, borrowing, and trading without the need for intermediaries. Decentralized zero-knowledge machine learning (ZKML) has the potential to significantly enhance privacy, security, and efficiency in the DeFi ecosystem.

The potential implications of ZKML for DeFi are vast. Financial data is among the most sensitive information, and protecting it is critical. The advent of DeFi has seen an explosion of data generated from various decentralized financial applications. ZKML could help DeFi platforms leverage this data without compromising user privacy.

By employing ZKML, DeFi platforms can potentially provide personalized recommendations, enhance risk assessment models, or fine-tune their algorithms based on user behavior, all while maintaining the privacy of their users. Moreover, it can enhance security, as sensitive data remains encrypted, potentially reducing the risk of data breaches.

A primary advantage of DeFi, in our opinion, is the democratization of financial services. However, maintaining privacy while participating in these decentralized networks can be challenging. The use of ZKML in DeFi could enable participants to prove certain properties about their data, such as their creditworthiness, without revealing the data itself.

For example, a borrower on a DeFi lending platform could use ZKML to demonstrate that they meet the necessary lending criteria without revealing sensitive financial information. Similarly, ZKML could allow investors to prove that they meet accreditation standards without disclosing their exact financial status.

Beyond privacy, ZKML can enhance the security and transparency of DeFi applications. Since financial transactions inherently involve sensitive data, utilizing ZKML could protect this data from potential breaches. Furthermore, the use of zero-knowledge proofs could make transactions more transparent, as they provide cryptographic verification of the data’s properties without revealing the data itself.

ZKML could also lead to significant efficiency improvements in DeFi. Traditional financial systems often require complex and time-consuming verification processes. By contrast, a zero-knowledge proof, once generated, can be checked quickly and with cryptographic assurance. This could streamline processes like loan approvals, identity verification, and compliance checks.

Despite these advantages, integrating ZKML into DeFi is currently very challenging. One of the main hurdles is the computational complexity of zero-knowledge proofs, which requires substantial resources, particularly for large datasets in existing financial databases. The efficiency and scalability of ZKML are thus critical areas for ongoing research and development.

There are also broader challenges associated with trust, user understanding, and regulation. Users need to trust the ZKML system and understand how it protects their privacy and data. Meanwhile, regulators need to understand the technology to develop appropriate regulatory frameworks while also not inadvertently stifling innovation.

Enhanced Privacy for DeFi Users:

One of the major challenges in DeFi is striking a balance between transparency, a cornerstone of blockchain technology, and privacy, a key user requirement. The use of ZKML could enhance user privacy by allowing DeFi applications to verify transaction details without disclosing the involved parties or the transaction amounts.

In more specific terms, ZKML could potentially enable DeFi users to conduct private transactions over public blockchains, ensure compliance with AML and KYC regulations without revealing unnecessary details, and protect user identities in public lending and borrowing markets.
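One well-known building block for hiding transaction amounts on a public ledger is the Pedersen commitment, which lets anyone check that committed inputs and outputs balance without learning the amounts. The sketch below is our own toy illustration of that single idea. The parameters `P`, `Q`, `G`, and `H` are small, insecure assumptions, and the amounts are made up.

```python
import secrets

# Toy parameters (assumption, insecure): G and H generate the order-Q subgroup mod P.
# In a real system nobody may know the discrete log of H with respect to G.
P, Q, G, H = 2039, 1019, 4, 9


def commit(amount: int, blinding: int) -> int:
    """Pedersen commitment: hides `amount` behind the random `blinding` factor."""
    return (pow(G, amount, P) * pow(H, blinding, P)) % P


def product(commitments: list[int]) -> int:
    """Multiply commitments; this adds the hidden amounts and blinding factors."""
    result = 1
    for c in commitments:
        result = (result * c) % P
    return result


def balanced(inputs: list[int], outputs: list[int]) -> bool:
    """Public check: do the committed inputs and outputs hide the same total?"""
    return product(inputs) == product(outputs)


# Sender side: amounts and blinding factors stay private.
in_amounts, out_amounts = [30, 12], [40, 2]
in_blinds = [secrets.randbelow(Q) for _ in in_amounts]
out_blinds = [secrets.randbelow(Q)]
out_blinds.append((sum(in_blinds) - out_blinds[0]) % Q)  # make blinding factors balance

inputs = [commit(a, r) for a, r in zip(in_amounts, in_blinds)]
outputs = [commit(a, r) for a, r in zip(out_amounts, out_blinds)]
print(balanced(inputs, outputs))  # the verifier sees only the commitments
```

The balance check alone is not enough for a real system. Deployed confidential-transaction designs also attach range proofs so that a sender cannot hide a negative or wrapped-around amount inside a commitment.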

Securing DeFi Protocols:

The open nature of DeFi protocols makes them targets for various forms of attacks, such as front-running and MEV ‘sandwich’ attacks. ZKML can help secure these protocols by enabling transactions to be verified and included in blocks without revealing their details until after they’ve been confirmed. This can potentially prevent front-running attacks where malicious actors observe pending transactions and try to exploit them.
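The idea of keeping transaction details hidden until ordering is fixed can be illustrated with a simple commit-reveal scheme. To be clear, this is a hash commitment rather than a zero-knowledge proof, and it is only a sketch under our own assumptions. The transaction payload and function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def commit(transaction: bytes) -> tuple[bytes, bytes]:
    """Return (commitment, salt). Only the commitment is published before ordering."""
    salt = secrets.token_bytes(32)  # random salt stops observers guessing the content
    commitment = hashlib.sha256(salt + transaction).digest()
    return commitment, salt


def reveal_is_valid(commitment: bytes, salt: bytes, transaction: bytes) -> bool:
    """Once ordering is fixed, anyone can check the revealed transaction matches."""
    expected = hashlib.sha256(salt + transaction).digest()
    return hmac.compare_digest(commitment, expected)


tx = b"swap 100 TOKEN_A for TOKEN_B, min_out=95"  # hypothetical transaction payload
commitment, salt = commit(tx)
print(reveal_is_valid(commitment, salt, tx))
```

An observer who sees only `commitment` in the pending pool learns nothing useful about the trade, so there is nothing to front-run. A zero-knowledge approach would go one step further and let validators check that the hidden transaction is well-formed before it is revealed.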

Furthermore, ZKML could be used in DeFi insurance applications to validate claims without revealing sensitive information about the claimant or the claim, thus preserving privacy and preventing potential fraudulent activities. Additional use cases include secured and unsecured loan markets, mortgages, on-chain revolving credit, and more.

Improving Efficiency in DeFi Systems:

Efficiency is a critical aspect of any financial system, decentralized or otherwise. By enabling fast verification of transactions and supporting faster consensus, ZKML could significantly enhance the efficiency of DeFi systems. This could potentially lead to more rapid finalization of transactions, improving user experience and increasing the overall throughput of DeFi systems.

Economic Models and Incentives:

The integration of ZKML into DeFi can also impact economic models and incentives. For instance, it can enable new types of private, decentralized exchanges where users can trade directly with each other without revealing their trades to the public. This could encourage more participation in DeFi by providing a greater level of privacy and security.

Regulatory Implications:

While ZKML presents many potential opportunities for enhancing DeFi, it also introduces new challenges for regulators. Current financial regulations depend on transparency, but the introduction of zero-knowledge proofs complicates this as they allow for privacy on public blockchains.

Regulators must therefore evolve their strategies to maintain oversight while respecting the privacy benefits introduced by ZKML. This could involve using zero-knowledge proofs themselves to verify compliance without infringing upon user privacy.

While ZKML offers substantial benefits for DeFi in terms of privacy, security, efficiency, and economic modeling, it also presents significant challenges, especially from a regulatory perspective. The further exploration of these areas can contribute greatly to the maturation and development of DeFi platforms.

Impact on User-Generated Content (UGC)

With the exponential growth of user-generated content (UGC), privacy and content ownership have become critical concerns. ZKML can play a significant role in addressing these issues in decentralized platforms.

Firstly, ZKML can enable platforms to analyze user behavior and content preferences without compromising user privacy, leading to a more personalized and engaging user experience. Secondly, it can provide a robust solution to preserve content ownership. Artists and creators can use zero-knowledge proofs to prove ownership of their content without revealing the content itself.

As digital platforms evolve and grow, user-generated content has become a critical factor driving their success. From social media posts and online reviews to amateur videos and open-source software, user-generated content fuels the Internet’s dynamism. However, issues of privacy, control, and monetization of this content are increasingly pressing. Integrating ZKML into platforms that host user-generated content could revolutionize how these concerns are addressed.

Most digital platforms today operate under a model where users provide content, and the platform collects, stores, and controls this content. This setup often leaves users with little say over how their content is used, who can access it, and how (or if) they are compensated for it.

By using ZKML, platforms could allow users to maintain control over their content while still enabling its use for things like personalization, recommendation, and ad targeting. Users could provide proofs about their content (e.g., topics, sentiment, demographics) without revealing the content itself. This could allow for more granular control over who can access what information about the content and under what circumstances.

For instance, a user could prove that their blog post is about cooking without revealing the specific recipes included in the post. This could allow them to control who has access to their unique recipes while still contributing to the overall cooking content ecosystem.

In addition to enhancing privacy, this could open up new possibilities for monetizing user-generated content. Users could potentially sell access to certain aspects of their content while keeping others private. This could lead to more equitable content ecosystems where users share in the value generated by their content.

However, ZKML’s application in the context of user-generated content also presents challenges. Technically, implementing ZKML in this way would require creating efficient, user-friendly systems that can handle vast amounts of diverse content. There would also need to be clear, understandable explanations of how the system works and what it means for users’ privacy and control over their content.

Trust is another crucial factor. Users must trust the ZKML system and believe that their content remains private when they’re told it does. Achieving this trust would likely require third-party audits, transparency about system operations, and possibly regulatory oversight.

ZKML’s potential impact on user-generated content, especially in the metaverse, is significant. Although it presents formidable technical and trust-related challenges, the promise of a more private, user-controlled, and equitable content ecosystem makes it an exciting area for exploration.

Decentralized Compute and Analytics

Decentralized computation and analytics refer to the idea of executing computations or analytics tasks in a decentralized network. ZKML plays a pivotal role in preserving data privacy in this scenario.

In a traditional centralized model, raw data would be sent to a central server for processing. However, ZKML can perform these tasks without the need to expose the raw data. This is particularly useful for privacy-centric applications where disclosing raw data to a third-party compute provider is not an option.

It’s important to note that the real power of ZKML in decentralized compute and analytics lies in the potential for collaborative machine learning. Multiple entities can jointly train machine learning models on their combined data, without having to share the data itself. This way, institutions can leverage the full potential of their collective data while strictly maintaining data privacy.

Decentralized computing and analytics represent a significant paradigm shift from traditional centralized systems. They seek to offer increased privacy, enhanced security, and the potential for greater democratization of data. However, they also introduce new challenges. The application of ZKML to these decentralized systems could significantly alter how we approach computation and analytics.

In a traditional centralized system, data is collected, stored, and analyzed in a single location or a small number of locations. This can create a single point of failure, expose the system to potential attacks, and result in the concentration of power and control over the data in the hands of a few entities. Decentralized systems aim to overcome these issues by spreading data and computation across multiple nodes in a network.

With decentralized compute, data can remain at its source or be distributed across a network, which reduces the risk of central points of failure and potential data breaches. Analytics can then be conducted on this distributed data without the need for it to be centralized. This is where ZKML comes into play.

ZKML can enable machine learning models to be trained and applied on data distributed across the network, without needing the data to be revealed or centralized. This allows rich, complex analytics to be conducted while preserving the privacy and security of the data. The team at Gensyn is attempting to tackle this problem and has raised a substantial amount of financing to push this forward with a world-class founding team.

This could have profound implications for many sectors. For instance, in healthcare, a global network of hospitals could collectively train a machine learning model on patient data to predict disease outcomes without ever sharing sensitive patient information.

In the financial sector, banks could conduct fraud detection analytics across their entire network of customers without exposing sensitive financial data. And in retail, businesses could gain insights into customer behavior across a wide range of locations and channels without compromising customer privacy.

However, implementing decentralized compute and analytics with ZKML also brings significant challenges. These include the technical complexity of designing and implementing effective ZKML algorithms, ensuring the accuracy and quality of distributed data, and managing the computational resources required to process large datasets in a decentralized manner.

There are also trust and governance issues to consider. Establishing trust in a decentralized network and its data can be difficult, particularly when the participants in the network do not necessarily trust each other. And the governance of such networks, including decisions about data use and access, can be complex and contentious. Overall, while the path towards decentralized compute and analytics with ZKML remains fraught with challenges, it also offers a promising direction for the future of computation and data analytics.

Regulatory Compliance and Auditability

One of the more compelling applications of ZKML is in regulatory compliance and auditability. In the current regulatory environment, organizations often need to demonstrate compliance with various data protection laws. ZKML could potentially allow an entity to prove compliance without revealing any actual data, thereby complying with regulations without sacrificing privacy.

For instance, a financial institution could prove that it has performed the necessary anti-money laundering checks, or that it is appropriately managing credit risk, without exposing individual customer data. This has the potential to revolutionize how audits are conducted, making them more efficient, effective, and secure.

Regulatory compliance and auditability are crucial for businesses across industries, particularly those handling sensitive data. In this context, ZKML offers a groundbreaking approach to demonstrating compliance without exposing underlying data.

Typically, demonstrating compliance involves revealing data to regulatory bodies, auditors, or third-party assessors. This process, while necessary, can compromise the privacy and security of sensitive data. The beauty of ZKML is that it enables entities to verify the truth of certain claims about their data without revealing the data itself.

For instance, let’s take the example of a bank needing to prove it is adhering to anti-money laundering (AML) regulations. AML procedures involve complex data analysis, looking for patterns and links that might suggest illegal activity. With traditional methods, demonstrating compliance would require revealing sensitive customer data to regulators. With ZKML, however, the bank could use a zero-knowledge proof to demonstrate that it has carried out the required checks without exposing any customer information.
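A much simpler relative of this idea, which we sketch here purely for intuition, is a Merkle tree commitment. The bank publishes a single root hash over all of its check records, and an auditor can later spot-check one record with an inclusion proof while the other records stay undisclosed. This is selective disclosure rather than a true zero-knowledge proof, and the record format and function names below are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return all tree levels, from hashed leaves up to the single root."""
    level = [h(b"leaf:" + leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node when a level has odd length
            level = level + [level[-1]]
        level = [h(b"node:" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def inclusion_proof(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect the sibling hashes needed to recompute the root from one leaf."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))  # (hash, sibling_is_left)
        index //= 2
    return proof


def verify_inclusion(root: bytes, leaf: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    """Recompute the path to the root using only the leaf and its sibling hashes."""
    node = h(b"leaf:" + leaf)
    for sibling, sibling_is_left in proof:
        node = h(b"node:" + sibling + node) if sibling_is_left else h(b"node:" + node + sibling)
    return node == root


# Hypothetical AML check records; only the root would be published.
records = [f"customer-{i:04d}|aml-check|passed".encode() for i in range(5)]
levels = build_levels(records)
root = levels[-1][0]
print(verify_inclusion(root, records[3], inclusion_proof(levels, 3)))
```

In practice each record would also need a random salt, since short, predictable records could otherwise be guessed from their hashes. A full ZKML approach would aim to prove properties of all records at once without opening any of them.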

This could even extend to real-time compliance monitoring. Regulators could verify ongoing compliance without constant access to sensitive data, increasing the efficiency of regulatory oversight and reducing the security risks associated with data transfer.

The same principle could be applied across a variety of other regulatory areas — from data protection regulations like GDPR, to industry-specific rules like HIPAA in healthcare. The ability to prove compliance without revealing sensitive information represents a paradigm shift in the approach to regulatory audits, potentially paving the way for a more secure and efficient compliance environment.

Despite these potential advantages, the implementation of ZKML in regulatory compliance and auditability also poses challenges. The development and adoption of standards for zero-knowledge proofs in a regulatory context is a significant hurdle. This involves not just technical considerations, but also legal and policy issues. Regulators and auditees alike need to trust and understand the technology for it to be effective.

Furthermore, the computational complexity of zero-knowledge proofs means that significant computational resources may be required, particularly for large datasets. Ongoing research and development are needed to optimize these processes and make them more accessible and practical for widespread use.

Personalized Experiences without Privacy Trade-offs

Decentralized systems often aim to deliver personalized user experiences, which traditionally rely on access to user data. With ZKML, personalization can be achieved without compromising on user privacy. Machine learning models could learn from user behavior and deliver personalized content or recommendations, all while maintaining the privacy of user data. This could be particularly impactful in sectors such as e-commerce, entertainment, and digital advertising.

Traditionally, personalization and privacy have been considered trade-offs in the digital world. The more personalized the user experience, the more data the service provider typically needs to access, analyze, and store. ZKML has the potential to disrupt this dynamic and create personalized experiences without compromising privacy.

In an age where consumers expect personalized interactions, businesses across industries are constantly looking for ways to tailor their services to individual needs. This can range from personalized product recommendations in e-commerce, to customized news feeds on social media platforms, to individual health insights from wearable devices.

To deliver this personalization, machine learning algorithms are typically used to analyze a wealth of user data and identify patterns, trends, and preferences. However, this approach requires access to sensitive user data and could compromise user privacy, especially if the data is mishandled or misused.

This is where ZKML can be a potential game-changer. By enabling machine learning models to learn from data without actually accessing the raw data, ZKML offers a path to personalization that doesn’t involve compromising user privacy.

For example, consider a streaming platform that wants to recommend personalized content to its users. Traditionally, the platform would need to analyze a user’s watch history, ratings, and other interactions to make these recommendations. However, with ZKML, the platform could generate accurate recommendations without ever accessing the user’s watch history or other sensitive data.

ZKML can also be combined with multi-party computation, allowing multiple entities to jointly analyze their combined data without sharing it with each other. This could enable even more personalized experiences by incorporating data from various sources, all while preserving privacy.

However, implementing ZKML for personalized experiences is not without challenges. Ensuring the accuracy and relevance of personalized content without accessing raw data can be difficult. The success of ZKML in this regard will largely depend on the efficiency and effectiveness of the zero-knowledge proof algorithms used, as well as the machine learning models that are trained on this data.

Moreover, it’s essential to consider the ethical implications and potential for misuse. Just because data is anonymized and secure doesn’t mean it’s always ethical to use it for personalization. Companies will need to ensure they are transparent with users about how their data is being used and give users control over their data, even if it’s being used in a privacy-preserving manner.

Democratic Control Over Data

ZKML also has the potential to democratize control over data. In a decentralized system, each user typically has control over their own data. However, this control is often limited by the need to share data for processing or analysis. With ZKML, users could potentially benefit from data processing without having to relinquish control over their data. They can prove certain facts about their data without revealing the data itself.

Data has often been called the “new oil” due to its immense value in the modern world. ZKML can play a transformative role in shifting this paradigm, potentially enabling more democratic control over data.

In most current systems, the control and use of data is centralized. Social media platforms, for instance, have near-total control over user data, using it for everything from ad targeting to content recommendation algorithms. In these scenarios, users are usually left with little control over their own data and few means to verify how it’s used.

Decentralized systems aim to change this by giving users control over their own data. But true control goes beyond just deciding whether or not to share data. Users also need to control how their data is used after it’s shared. ZKML can help achieve this finer level of control.

ZKML could also enable users to track how their data is used after it’s shared. For example, access to encrypted data could be gated so that every access or use is recorded, providing a secure and transparent audit trail.
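One simple way to make such an audit trail tamper-evident is to chain the log entries together with hashes, so that altering any past entry breaks every later link. The sketch below shows only that logging idea. It does not cover encryption or access control, and the field names and accessor labels are our own assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps(event, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + payload).hexdigest()


def append_access(log: list[dict], accessor: str, purpose: str) -> None:
    """Record one data access, linked to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    event = {"accessor": accessor, "purpose": purpose, "seq": len(log)}
    log.append({"event": event, "prev": prev_hash, "hash": entry_hash(prev_hash, event)})


def log_is_intact(log: list[dict]) -> bool:
    """Recompute every link; any edited or removed entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_access(log, "recommender-service", "content ranking")  # hypothetical accessors
append_access(log, "ad-partner", "audience statistics")
print(log_is_intact(log))
```

If the latest hash is periodically published somewhere the data user cannot rewrite, such as a public blockchain, the owner of the data can later detect any attempt to edit or drop entries.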

Implementing this level of democratic data control involves significant challenges. User-friendly interfaces and education are critical to ensure that users understand and can exercise their data rights. Moreover, robust legal frameworks might be required to enforce these rights and hold data users accountable.

The technical challenges involved in ZKML, such as computational complexity and ensuring data accuracy, are also relevant here, as with most of the use cases outlined above. However, if these hurdles can be overcome, ZKML could play a key role in shifting the balance of data control back towards individuals, enabling a more democratic and privacy-preserving digital ecosystem. The team at Worldcoin is also working on this particular use case, with over $100M of fresh funding from top venture capital firms to tackle this ambitious challenge.

Addressing the Challenges of ZKML

As mentioned previously, there are significant challenges to be addressed in implementing ZKML, particularly in a decentralized context. The computational complexity of zero-knowledge proofs and the scalability of machine learning algorithms are notable issues.

Optimization of zero-knowledge proofs and development of more efficient algorithms are areas of active research. Furthermore, the development of distributed machine learning algorithms that can operate effectively in a decentralized network is critical. Such algorithms would need to be capable of learning from distributed data without needing to aggregate it in a central location.

While the potential of ZKML in a decentralized context is considerable, realizing this potential comes with significant challenges. These range from technical and computational issues to questions around trust, understanding, and standardization.

One of the primary technical challenges involves the computational complexity of zero-knowledge proofs. These proofs often require considerable computational resources, which can be a limiting factor, particularly for large datasets. The development of more efficient zero-knowledge proof algorithms is an area of active research, and progress in this domain will be critical for the widespread adoption of ZKML.

Scalability of machine learning algorithms in a decentralized context is another significant challenge. Traditional machine learning models often rely on centralized data and computation, which doesn’t translate well to a decentralized context. Developing distributed machine learning algorithms that can learn effectively from decentralized data without requiring data aggregation is a complex task.

Trust and understanding are also significant challenges. For users to feel comfortable with ZKML, they need to trust that their data is indeed private and secure. This trust depends not only on the robustness of the zero-knowledge proofs and machine learning algorithms, but also on transparent and comprehensible communication about how these technologies work.

For businesses and regulators, the challenge is to understand the implications of ZKML. This includes understanding how to integrate ZKML into existing systems and workflows, how to regulate its use, and how to audit its implementation. Development and adoption of standards for zero-knowledge proofs in different contexts will be essential for addressing these challenges.

Addressing these challenges is a complex task that requires cooperation and collaboration across different domains. Technologists, businesses, regulators, and users all have a role to play in developing and implementing solutions. However, despite these challenges, the potential benefits of ZKML make it a promising area for continued research and development.

Future Outlook

Despite these challenges, we believe the potential of ZKML in the context of decentralized systems is immense. As research progresses and solutions to these challenges are developed, we can expect to see more widespread adoption of ZKML in various sectors.

The combination of privacy-preserving machine learning and decentralized control over data could usher in a new era of secure, personalized digital experiences. In the future, this technology could transform how we interact with digital platforms, shaping a digital environment where privacy and personalization are not mutually exclusive but are instead two sides of the same coin.

The promise of decentralized zero-knowledge machine learning is immense. As we push the frontiers of what’s possible in privacy-preserving computation, we believe we’re likely to see a proliferation of use cases and applications that are currently hard to envision.

We believe the first wave of adoption is likely to be in industries with high-stakes data privacy requirements. As mentioned in the sections above, these could include finance, healthcare, and industries where intellectual property is closely guarded. The ability to perform computations and derive insights from data without exposing the data itself would be an asset in these scenarios, particularly when combined with cost-effective machine learning and neural networks.

As we’ve discussed, we believe one major application could be in compliance and auditing. The potential for real-time, privacy-preserving auditing could streamline regulatory processes and reduce risks associated with data breaches.

In the longer term, we may see the emergence of entirely new business models based on ZKML. Today, many online business models rely on collecting and monetizing user data, often at the expense of privacy. We believe ZKML could enable business models that deliver personalized services and monetize interactions without ever accessing sensitive user data.

Another possible direction is the integration of ZKML with other advanced technologies. For instance, ZKML could be combined with secure multi-party computation, quantum-resistant cryptography, or other advanced privacy-preserving techniques to create even more secure and private computational systems.

However, the future of ZKML also hinges on how effectively we can address the associated challenges. This includes not only the technical challenges of developing efficient ZKML algorithms and systems, but also the broader challenges of building trust, understanding, and regulatory frameworks around these technologies. This will take a concerted and collaborative effort from various stakeholders, including public and private support, continued academic research, and consistent institutional proofs of concept.

In the coming years, we’re likely to see a rapid evolution in the ZKML landscape. We believe it’s an exciting field, and one that holds great promise for the future of privacy-preserving computation and data usage. The investment team at Struck Crypto is closely monitoring this evolving technology and speaking to top technical founders in this vertical on a consistent basis as part of our thesis-driven outlook.

Key Sources

- Daniel Shorr, Modulus Labs

- ZKonduit, Gensyn

- BlockTempo

- CoinTelegraph

- Worldcoin: https://worldcoin.org/blog/engineering/intro-to-zkml

- Modulus Labs Research: https://drive.google.com/file/d/1tylpowpaqcOhKQtYolPlqvx6R2Gv4IzE/view

- Aleo: https://www.aleo.org/post/zkml-irl-practical-use-cases-for-more-secure-models

Special thanks to Oliver H., Daniel S., and Leo L. for review and comments. This thought piece is intended for a general business and technology audience with an intermediate understanding of Web3 and technology evolution. All thoughts and additional comments are welcome.

Disclaimer

The information provided in this blog post is for educational and informational purposes only and is not intended to be investment advice or a recommendation. Struck has no obligation to update, modify, or amend the contents of this blog post nor to notify readers in the event that any information, opinion, forecast or estimate changes or subsequently becomes inaccurate or outdated. In addition, certain information contained herein has been obtained from third party sources and has not been independently verified by Struck. The company featured in this blog post is for illustrative purposes only, has been selected in order to provide an example of the types of investments made by Struck that fit the theme of this blog post and is not representative of all Struck portfolio companies.

Struck Capital Management LLC is registered with the United States Securities and Exchange Commission (“SEC”) as a Registered Investment Adviser (“RIA”). Nothing in this communication should be considered a specific recommendation to buy, sell, or hold a particular security or investment. Past performance of an investment does not guarantee future results. All investments carry risk, including loss of principal.